4 Types Of Neural Circuits

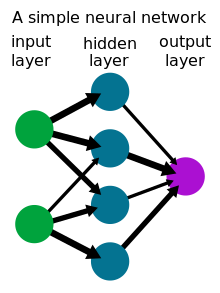

Simplified view of a feedforward artificial neural network

A neural network is a network or circuit of biological neurons, or, in a modern sense, an artificial neural network, composed of artificial neurons or nodes.[1] Thus, a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, used for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled in artificial neural networks as weights between nodes. A positive weight reflects an excitatory connection, while negative values mean inhibitory connections. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or it could be −1 and 1.
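The weighted-sum-plus-activation computation described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `neuron`, the optional `bias` term, and the choice of sigmoid (output between 0 and 1) or tanh (output between −1 and 1) as the activation function are assumptions made for the example.

```python
import math

def neuron(inputs, weights, bias=0.0, activation="sigmoid"):
    """Weighted sum of the inputs followed by an activation function (illustrative sketch)."""
    # Linear combination: each input is scaled by its weight, then everything is summed.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    if activation == "sigmoid":
        return 1.0 / (1.0 + math.exp(-z))   # squashes z into (0, 1)
    if activation == "tanh":
        return math.tanh(z)                 # squashes z into (-1, 1)
    raise ValueError(f"unknown activation: {activation}")

# A positive weight acts as an excitatory input, a negative weight as an inhibitory one.
print(neuron([1.0, 1.0], [0.5, -0.5]))                  # z = 0, so the output is 0.5
print(neuron([1.0, 0.0], [2.0, -3.0], activation="tanh"))
```

Here the two inputs in the first call cancel out exactly, which is why the sigmoid lands at its midpoint.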

These artificial networks may be used for predictive modeling, adaptive control and applications where they can be trained via a dataset. Self-learning resulting from experience can occur within networks, which can derive conclusions from a complex and seemingly unrelated set of information.[2]

Overview

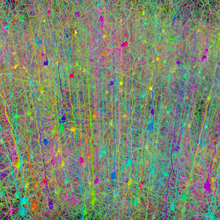

A biological neural network is composed of a group of chemically connected or functionally associated neurons. A single neuron may be connected to many other neurons and the total number of neurons and connections in a network may be extensive. Connections, called synapses, are usually formed from axons to dendrites, though dendrodendritic synapses[3] and other connections are possible. Apart from electrical signalling, there are other forms of signalling that arise from neurotransmitter diffusion.

Artificial intelligence, cognitive modelling, and neural networks are information processing paradigms inspired by how biological neural systems process information. Artificial intelligence and cognitive modelling try to simulate some properties of biological neural networks. In the artificial intelligence field, artificial neural networks have been applied successfully to speech recognition, image analysis and adaptive control, in order to construct software agents (in computer and video games) or autonomous robots.

Historically, digital computers evolved from the von Neumann model, and operate via the execution of explicit instructions via access to memory by a number of processors. On the other hand, the origins of neural networks are based on efforts to model information processing in biological systems. Unlike the von Neumann model, neural network computing does not separate memory and processing.

Neural network theory has served to better identify how the neurons in the brain function and to provide the basis for efforts to create artificial intelligence.

History

The preliminary theoretical base for contemporary neural networks was independently proposed by Alexander Bain[4] (1873) and William James[5] (1890). In their work, both thoughts and body activity resulted from interactions among neurons within the brain.

For Bain,[4] every action led to the firing of a certain set of neurons. When activities were repeated, the connections between those neurons strengthened. According to his theory, this repetition was what led to the formation of memory. The general scientific community at the time was skeptical of Bain's[4] theory because it required what appeared to be an inordinate number of neural connections within the brain. It is now apparent that the brain is exceedingly complex and that the same brain "wiring" can handle multiple problems and inputs.

James's[5] theory was similar to Bain's;[4] however, he suggested that memories and actions resulted from electrical currents flowing among the neurons in the brain. His model, by focusing on the flow of electrical currents, did not require individual neural connections for each memory or action.

C. S. Sherrington[7] (1898) conducted experiments to test James's theory. He ran electrical currents down the spinal cords of rats. However, instead of demonstrating an increase in electrical current as projected by James, Sherrington found that the electrical current strength decreased as the testing continued over time. Importantly, this work led to the discovery of the concept of habituation.

McCulloch and Pitts[8] (1943) created a computational model for neural networks based on mathematics and algorithms. They called this model threshold logic. The model paved the way for neural network research to split into two distinct approaches. One approach focused on biological processes in the brain and the other focused on the application of neural networks to artificial intelligence.
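A threshold logic unit in the spirit of McCulloch and Pitts can be sketched as follows. This is a simplified modern reading, assuming binary inputs, real-valued weights and a firing threshold; the helper names `threshold_unit`, `and_gate`, `or_gate` and `not_gate` are hypothetical, and the original 1943 model treated inhibition differently (an active inhibitory input vetoed firing outright rather than contributing a negative weight).

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (1) if the weighted input sum reaches the threshold, otherwise stay silent (0)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

# With unit weights, the threshold alone decides which Boolean function is computed.
def and_gate(a, b):
    return threshold_unit([a, b], [1, 1], threshold=2)

def or_gate(a, b):
    return threshold_unit([a, b], [1, 1], threshold=1)

def not_gate(a):
    # A negative (inhibitory) weight with threshold 0 inverts the input.
    return threshold_unit([a], [-1], threshold=0)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b))
```

Composing such units is what lets threshold logic express arbitrary Boolean functions, at the cost of fixing every weight by hand.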

In the late 1940s psychologist Donald Hebb[9] created a hypothesis of learning based on the mechanism of neural plasticity that is now known as Hebbian learning. Hebbian learning is considered to be a 'typical' unsupervised learning rule and its later variants were early models for long-term potentiation. These ideas started being applied to computational models in 1948 with Turing's B-type machines.
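Hebb's idea is often summarized as a weight change proportional to the product of pre- and postsynaptic activity, Δw_i = η·x_i·y. The sketch below assumes that textbook form with a hypothetical learning rate η = 0.1; to keep it short, the postsynaptic output `y` is supplied directly rather than computed by the neuron. Note that this plain rule lets weights grow without bound, which is one reason later variants add normalization or decay.

```python
def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """Plain Hebbian rule: change each w_i in proportion to x_i * y."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]

weights = [0.0, 0.0, 0.0]
# Inputs 0 and 1 are repeatedly active together with the (given) postsynaptic output;
# input 2 is never active, so its weight never changes.
for _ in range(5):
    weights = hebbian_update(weights, inputs=[1.0, 1.0, 0.0], output=1.0)
print(weights)
```

After five co-activations the first two weights have each grown by about 0.5, while the silent input's weight is still zero: "cells that fire together wire together".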

Farley and Clark[10] (1954) first used computational machines, then called calculators, to simulate a Hebbian network at MIT. Other neural network computational machines were created by Rochester, Holland, Haibt, and Duda[11] (1956).

Rosenblatt[12] (1958) created the perceptron, an algorithm for pattern recognition based on a two-layer computer learning network using simple addition and subtraction. With mathematical notation, Rosenblatt also described circuitry not in the basic perceptron, such as the exclusive-or circuit, a circuit whose mathematical computation could not be processed until after the backpropagation algorithm was created by Werbos[13] (1975).
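The perceptron's error-correcting update uses only addition and subtraction, which a short sketch makes concrete. This assumes the common textbook form of the rule (integer learning rate 1, a bias term, output 1 when the weighted sum is positive); the names `predict`, `train_perceptron`, `AND_SAMPLES` and `XOR_SAMPLES` are hypothetical. It also shows the limitation discussed next: the same rule never settles on exclusive-or.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, epochs=20, lr=1):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    weights, bias = [0] * len(samples[0][0]), 0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)   # -1, 0 or +1
            if error:
                errors += 1
                # Nudge the weights toward the correct answer by simple addition/subtraction.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:
            return weights, bias, True   # a full pass with no mistakes: converged
    return weights, bias, False

AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(train_perceptron(AND_SAMPLES)[2])  # True: AND is linearly separable
print(train_perceptron(XOR_SAMPLES)[2])  # False: no single line separates XOR
```

Because an error-free pass would mean a single line separates the classes, no number of extra epochs can make the XOR case converge.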

Neural network research stagnated after the publication of machine learning research by Marvin Minsky and Seymour Papert[14] (1969). They discovered two key issues with the computational machines that processed neural networks. The first issue was that single-layer neural networks were incapable of processing the exclusive-or circuit. The second significant issue was that computers were not sophisticated enough to effectively handle the long run time required by large neural networks. Neural network research slowed until computers achieved greater processing power. Also key in later advances was the backpropagation algorithm, which effectively solved the exclusive-or problem (Werbos 1975).[13]
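The core of backpropagation is applying the chain rule layer by layer to get the gradient of the error with respect to every weight, including those of a hidden layer that a single-layer network lacks. The sketch below assumes a tiny 2-2-1 network with sigmoid units and squared error on the four exclusive-or cases; the flat parameter layout and the names `loss_and_grad` and `numerical_grad` are hypothetical. Rather than claiming a particular training run succeeds, it checks the backpropagated gradient against finite differences and takes one small descent step.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# The four exclusive-or cases: ((x1, x2), target).
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def loss_and_grad(p):
    """Squared error of a 2-2-1 sigmoid network and its gradient via backpropagation.

    Hypothetical flat layout: p[0:4] hidden weights (row-major), p[4:6] hidden biases,
    p[6:8] output weights, p[8] output bias.
    """
    w1 = [[p[0], p[1]], [p[2], p[3]]]
    b1, w2, b2 = p[4:6], p[6:8], p[8]
    loss, grad = 0.0, [0.0] * 9
    for x, t in XOR:
        # Forward pass.
        h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(2)]
        y = sigmoid(w2[0] * h[0] + w2[1] * h[1] + b2)
        loss += 0.5 * (y - t) ** 2
        # Backward pass: propagate the output error back through each layer.
        d_out = (y - t) * y * (1 - y)
        grad[8] += d_out
        for j in range(2):
            grad[6 + j] += d_out * h[j]
            d_hid = d_out * w2[j] * h[j] * (1 - h[j])
            grad[4 + j] += d_hid
            grad[2 * j] += d_hid * x[0]
            grad[2 * j + 1] += d_hid * x[1]
    return loss, grad

def numerical_grad(p, eps=1e-6):
    """Central finite differences, used only to check the backpropagated gradient."""
    g = []
    for k in range(len(p)):
        plus, minus = list(p), list(p)
        plus[k] += eps
        minus[k] -= eps
        g.append((loss_and_grad(plus)[0] - loss_and_grad(minus)[0]) / (2 * eps))
    return g

random.seed(0)
params = [random.uniform(-1, 1) for _ in range(9)]
loss, analytic = loss_and_grad(params)
numeric = numerical_grad(params)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))   # should be tiny

# One small step against the gradient should lower the error.
new_params = [p - 0.01 * g for p, g in zip(params, analytic)]
new_loss = loss_and_grad(new_params)[0]
```

Repeating that descent step many times is what trains the network; whether a given run reaches a perfect XOR solution depends on the initialization and step size.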

In the late 1970s to early 1980s, interest briefly emerged in theoretically investigating the Ising model in relation to Cayley tree topologies and large neural networks. In 1981, the Ising model was solved exactly for the general case of closed Cayley trees (with loops) with an arbitrary branching ratio[15] and found to exhibit unusual phase transition behavior in its local-apex and long-range site-site correlations.[16][17]

The parallel distributed processing of the mid-1980s became popular under the name connectionism. The text by Rumelhart and McClelland[18] (1986) provided a full exposition on the use of connectionism in computers to simulate neural processes.

Neural networks, as used in artificial intelligence, have traditionally been viewed as simplified models of neural processing in the brain, even though the relation between this model and brain biological architecture is debated, as it is not clear to what degree artificial neural networks mirror brain function.[19]

Artificial intelligence

A neural network (NN), in the case of artificial neurons called artificial neural network (ANN) or simulated neural network (SNN), is an interconnected group of natural or artificial neurons that uses a mathematical or computational model for information processing based on a connectionist approach to computation. In most cases an ANN is an adaptive system that changes its structure based on external or internal information that flows through the network.

In more practical terms, neural networks are non-linear statistical data modeling or decision-making tools. They can be used to model complex relationships between inputs and outputs or to find patterns in data.

An artificial neural network involves a network of simple processing elements (artificial neurons) which can exhibit complex global behavior, determined by the connections between the processing elements and element parameters. Artificial neurons were first proposed in 1943 by Warren McCulloch, a neurophysiologist, and Walter Pitts, a logician, who first collaborated at the University of Chicago.[20]

One classical type of artificial neural network is the recurrent Hopfield network.
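A Hopfield network stores patterns in symmetric weights and recalls a stored pattern from a corrupted version by repeatedly updating units until the state stops changing. The sketch below assumes the classic setup: ±1 units, Hebbian outer-product weights with no self-connections, and asynchronous updates in a fixed order. The two 8-unit patterns and the names `train_hopfield` and `recall` are made up for illustration.

```python
def train_hopfield(patterns):
    """Hebbian outer-product weights for +/-1 patterns, with no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state, max_sweeps=10):
    """Asynchronous updates in fixed order until a full sweep changes nothing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i, row in enumerate(w):
            h = sum(wij * sj for wij, sj in zip(row, state))   # local field of unit i
            new = state[i] if h == 0 else (1 if h > 0 else -1)
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state

p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]   # orthogonal to p1
w = train_hopfield([p1, p2])
noisy = [-p1[0]] + p1[1:]           # p1 with its first unit flipped
print(recall(w, noisy) == p1)       # True
```

The recurrence is what makes this "content-addressable memory": the network's own feedback pulls a nearby state back to the stored pattern.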

The concept of a neural network appears to have first been proposed by Alan Turing in his 1948 paper Intelligent Machinery in which he called them "B-type unorganised machines".[21]

The utility of artificial neural network models lies in the fact that they can be used to infer a function from observations and also to use it. Unsupervised neural networks can also be used to learn representations of the input that capture the salient characteristics of the input distribution, e.g., see the Boltzmann machine (1983), and more recently, deep learning algorithms, which can implicitly learn the distribution function of the observed data. Learning in neural networks is particularly useful in applications where the complexity of the data or task makes the design of such functions by hand impractical.

Applications

Neural networks can be used in different fields. The tasks to which artificial neural networks are applied tend to fall within the following broad categories:

- Function approximation, or regression analysis, including time series prediction and modeling.

- Classification, including pattern and sequence recognition, novelty detection and sequential decision making.

- Data processing, including filtering, clustering, blind signal separation and compression.

Application areas of ANNs include nonlinear system identification[22] and control (vehicle control, process control), game-playing and decision making (backgammon, chess, racing), pattern recognition (radar systems, face identification, object recognition), sequence recognition (gesture, speech, handwritten text recognition), medical diagnosis, financial applications, data mining (or knowledge discovery in databases, "KDD"), visualization and e-mail spam filtering. For example, it is possible to build a semantic profile of a user's interests from their pictures, using networks trained for object recognition.[23]

Neuroscience

Theoretical and computational neuroscience is the field concerned with the analysis and computational modeling of biological neural systems. Since neural systems are intimately related to cognitive processes and behaviour, the field is closely related to cognitive and behavioural modeling.

The aim of the field is to create models of biological neural systems in order to understand how biological systems work. To gain this understanding, neuroscientists strive to make a link between observed biological processes (data), biologically plausible mechanisms for neural processing and learning (biological neural network models) and theory (statistical learning theory and information theory).

Types of models

Many models are used, defined at different levels of abstraction and modeling different aspects of neural systems. They range from models of the short-term behaviour of individual neurons, through models of the dynamics of neural circuitry arising from interactions between individual neurons, to models of behaviour arising from abstract neural modules that represent complete subsystems. These include models of the long-term and short-term plasticity of neural systems and its relation to learning and memory, from the individual neuron to the system level.

Connectivity

In August 2020 scientists reported that bi-directional connections, or added appropriate feedback connections, can accelerate and improve communication between and within modular neural networks of the brain's cerebral cortex and lower the threshold for their successful communication. They showed that adding feedback connections between a resonance pair can support successful propagation of a single pulse packet throughout the entire network.[24][25]

Criticism

Historically, a common criticism of neural networks, particularly in robotics, was that they require a large diversity of training samples for real-world operation. This is not surprising, since any learning machine needs sufficient representative examples in order to capture the underlying structure that allows it to generalize to new cases. Dean Pomerleau, in his research presented in the paper "Knowledge-based Training of Artificial Neural Networks for Autonomous Robot Driving," uses a neural network to train a robotic vehicle to drive on multiple types of roads (single lane, multi-lane, dirt, etc.). A large amount of his research is devoted to (1) extrapolating multiple training scenarios from a single training experience, and (2) preserving past training diversity so that the system does not become overtrained (if, for example, it is presented with a series of right turns, it should not learn to always turn right). These issues are common in neural networks that must decide from amongst a wide variety of responses, but can be dealt with in several ways, for example by randomly shuffling the training examples, by using a numerical optimization algorithm that does not take too large steps when changing the network connections following an example, or by grouping examples in so-called mini-batches.
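The three remedies named above (shuffling, small steps, mini-batches) fit in a short training loop. To keep the sketch self-contained, a straight-line model y = a·x + b stands in for a neural network; the data, batch size 4, learning rate 0.05 and the names `minibatches` and `sgd_fit_line` are all illustrative assumptions, not Pomerleau's setup.

```python
import random

def minibatches(examples, batch_size, rng):
    """Yield the examples in a fresh random order, grouped into mini-batches."""
    order = list(examples)
    rng.shuffle(order)   # breaks up long runs of similar examples (e.g. many right turns)
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]

def sgd_fit_line(examples, epochs=300, batch_size=4, lr=0.05, seed=0):
    """Fit y = a*x + b by mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    a = b = 0.0
    for _ in range(epochs):
        for batch in minibatches(examples, batch_size, rng):
            # Averaging over the batch keeps any single example from dominating a step.
            ga = sum((a * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum((a * x + b - y) for x, y in batch) / len(batch)
            # A small learning rate keeps each change to the parameters modest.
            a -= lr * ga
            b -= lr * gb
    return a, b

data = [(i / 10, 2 * (i / 10) + 1) for i in range(20)]   # noise-free points on y = 2x + 1
a, b = sgd_fit_line(data)
print(round(a, 3), round(b, 3))
```

With a real network only the gradient computation changes; the shuffling and batching loop stays the same.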

A. K. Dewdney, a former Scientific American columnist, wrote in 1997, "Although neural nets do solve a few toy problems, their powers of computation are so limited that I am surprised anyone takes them seriously as a general problem-solving tool."[citation needed]

Arguments for Dewdney's position are that to implement large and effective software neural networks, much processing and storage resources need to be committed. While the brain has hardware tailored to the task of processing signals through a graph of neurons, simulating even a most simplified form on Von Neumann technology may compel a neural network designer to fill many millions of database rows for its connections, which can consume vast amounts of computer memory and data storage capacity. Furthermore, the designer of neural network systems will often need to simulate the transmission of signals through many of these connections and their associated neurons, which must often be matched with incredible amounts of CPU processing power and time. While neural networks often yield effective programs, they too often do so at the cost of efficiency (they tend to consume considerable amounts of time and money).

Arguments against Dewdney's position are that neural nets have been successfully used to solve many complex and diverse tasks, such as autonomously flying aircraft.[26]

Technology writer Roger Bridgman commented on Dewdney's statements about neural nets:

Neural networks, for instance, are in the dock not only because they have been hyped to high heaven, (what hasn't?) but also because you could create a successful net without understanding how it worked: the bunch of numbers that captures its behaviour would in all probability be "an opaque, unreadable table...valueless as a scientific resource".

In spite of his emphatic declaration that science is not technology, Dewdney seems here to pillory neural nets as bad science when most of those devising them are just trying to be good engineers. An unreadable table that a useful machine could read would still be well worth having.[27]

Although it is true that analyzing what has been learned by an artificial neural network is difficult, it is much easier to do so than to analyze what has been learned by a biological neural network. Moreover, recent emphasis on the explainability of AI has contributed towards the development of methods, notably those based on attention mechanisms, for visualizing and explaining learned neural networks. Furthermore, researchers involved in exploring learning algorithms for neural networks are gradually uncovering generic principles that allow a learning machine to be successful. For example, Bengio and LeCun (2007) wrote an article regarding local vs non-local learning, as well as shallow vs deep architecture.[28]

Other criticisms came from believers of hybrid models (combining neural networks and symbolic approaches). They advocate the combination of these two approaches and believe that hybrid models can better capture the mechanisms of the human mind (Sun and Bookman, 1990).[full citation needed]

Recent improvements

While initially research had been concerned mostly with the electrical characteristics of neurons, a particularly important part of the investigation in recent years has been the exploration of the role of neuromodulators such as dopamine, acetylcholine, and serotonin on behaviour and learning.[citation needed]

Biophysical models, such as BCM theory, have been important in understanding mechanisms for synaptic plasticity, and have had applications in both computer science and neuroscience. Research is ongoing in understanding the computational algorithms used in the brain, with some recent biological evidence for radial basis networks and neural backpropagation as mechanisms for processing data.[citation needed]
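The defining feature of BCM-style plasticity is a modification threshold θ: synapses strengthen when postsynaptic activity y exceeds θ and weaken when it falls below, while θ itself slides with recent activity. The sketch below is a simplified discrete-time version assuming a linear neuron, φ(y) = y(y − θ), and θ tracking a running average of y² with a hypothetical time constant `tau`; published BCM variants differ in these details, and `bcm_step` is a made-up name.

```python
def bcm_step(weights, inputs, theta, lr=0.01, tau=10.0):
    """One discrete-time step of a simplified BCM-style rule for a linear neuron.

    Returns (new_weights, new_theta). Synapses strengthen when y > theta (LTP-like)
    and weaken when 0 < y < theta (LTD-like); theta drifts toward y**2.
    """
    y = sum(w * x for w, x in zip(weights, inputs))
    phi = y * (y - theta)                       # sign flips at the sliding threshold
    new_weights = [w + lr * phi * x for w, x in zip(weights, inputs)]
    new_theta = theta + (y * y - theta) / tau   # running average of y squared
    return new_weights, new_theta

print(bcm_step([1.0], [1.0], theta=0.5))   # y = 1 > theta: the weight grows
print(bcm_step([1.0], [1.0], theta=2.0))   # y = 1 < theta: the weight shrinks
```

The sliding threshold is what keeps this rule from running away the way plain Hebbian learning does: sustained high activity raises θ and makes further strengthening harder.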

Computational devices have been created in CMOS for both biophysical simulation and neuromorphic computing. More recent efforts show promise for creating nanodevices for very large scale principal components analyses and convolution.[29] If successful, these efforts could usher in a new era of neural computing that is a step beyond digital computing,[30] because it depends on learning rather than programming and because it is fundamentally analog rather than digital even though the first instantiations may in fact be with CMOS digital devices.

Between 2009 and 2012, the recurrent neural networks and deep feedforward neural networks developed in the research group of Jürgen Schmidhuber at the Swiss AI Lab IDSIA won eight international competitions in pattern recognition and machine learning.[31] For example, multi-dimensional long short-term memory (LSTM)[32][33] won three competitions in connected handwriting recognition at the 2009 International Conference on Document Analysis and Recognition (ICDAR), without any prior knowledge about the three different languages to be learned.

Variants of the back-propagation algorithm, as well as unsupervised methods by Geoff Hinton and colleagues at the University of Toronto, can be used to train deep, highly nonlinear neural architectures,[34] similar to the 1980 Neocognitron by Kunihiko Fukushima,[35] and the "standard architecture of vision",[36] inspired by the simple and complex cells identified by David H. Hubel and Torsten Wiesel in the primary visual cortex.

Radial basis function and wavelet networks have also been introduced. These can be shown to offer best approximation properties and have been applied in nonlinear system identification and classification applications.[22]
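A radial basis function network computes its output as a weighted sum of bumps, each centred on a hidden unit: y(x) = Σ_k w_k·φ(|x − c_k|). The sketch below assumes one common textbook variant: Gaussian φ, one hidden unit per training point, and output weights found by solving the resulting linear system so the network interpolates the data exactly. The width, the sine target and the helper names (`gaussian`, `solve`, `fit_rbf`, `rbf_predict`) are illustrative assumptions.

```python
import math

def gaussian(r, width):
    return math.exp(-(r / width) ** 2)

def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system a @ w = b."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def fit_rbf(centers, targets, width=1.0):
    """Choose output weights so the network passes through every (center, target) pair."""
    phi = [[gaussian(abs(ci - cj), width) for cj in centers] for ci in centers]
    return solve(phi, targets)

def rbf_predict(x, centers, weights, width=1.0):
    # Output = weighted sum of Gaussian bumps centred on the hidden units.
    return sum(w * gaussian(abs(x - c), width) for w, c in zip(weights, centers))

centers = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
targets = [math.sin(c) for c in centers]
weights = fit_rbf(centers, targets)
print(rbf_predict(1.25, centers, weights), math.sin(1.25))   # close agreement between centers
```

In larger problems the centers are usually fewer than the data points and the weights are fitted by least squares instead, which avoids the ill-conditioning that exact interpolation can suffer.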

Deep learning feedforward networks alternate convolutional layers and max-pooling layers, topped by several pure classification layers. Fast GPU-based implementations of this approach have won several pattern recognition contests, including the IJCNN 2011 Traffic Sign Recognition Competition[37] and the ISBI 2012 Segmentation of Neuronal Structures in Electron Microscopy Stacks challenge.[38] Such neural networks also were the first artificial pattern recognizers to achieve human-competitive or even superhuman performance[39] on benchmarks such as traffic sign recognition (IJCNN 2012), or the MNIST handwritten digits problem of Yann LeCun and colleagues at NYU.
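One convolution, nonlinearity and max-pooling stage of such a network can be sketched in pure Python. This is an illustrative sketch, not the GPU implementations cited above: it assumes a single-channel image, one hand-picked 2×2 vertical-edge kernel (real networks learn many kernels), ReLU as the nonlinearity, and 2×2 non-overlapping pooling. The names `conv2d`, `relu` and `max_pool` are hypothetical helpers.

```python
def conv2d(image, kernel):
    """Valid 2-D cross-correlation (what most deep-learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping max-pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# A 5x5 image with a vertical dark-to-bright edge, and a kernel that responds to it.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]
pooled = max_pool(relu(conv2d(image, kernel)))
print(pooled)   # the edge response survives pooling in the left column
```

Stacking several such stages and feeding the final pooled maps into ordinary fully connected classification layers gives the architecture the paragraph describes.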

See also

- ADALINE

- Adaptive resonance theory

- Biological cybernetics

- Biologically inspired computing

- Cerebellar model articulation controller

- Cognitive architecture

- Cognitive science

- Connectomics

- Cultured neuronal networks

- Deep learning

- Deep Image Prior

- Digital morphogenesis

- Efficiently updatable neural network

- Exclusive or

- Evolutionary algorithm

- Genetic algorithm

- Gene expression programming

- Generative adversarial network

- Group method of data handling

- Habituation

- In situ adaptive tabulation

- Memristor

- Multilinear subspace learning

- Neural network software

- Nonlinear system identification

- Parallel constraint satisfaction processes

- Parallel distributed processing

- Predictive analytics

- Radial basis function network

- Self-organizing map

- Simulated reality

- Support vector machine

- Tensor product network

- Time delay neural network

References

- ^ Hopfield, J. J. (1982). "Neural networks and physical systems with emergent collective computational abilities". Proc. Natl. Acad. Sci. U.S.A. 79 (8): 2554–2558. Bibcode:1982PNAS...79.2554H. doi:10.1073/pnas.79.8.2554. PMC 346238. PMID 6953413.

- ^ "Neural Net or Neural Network - Gartner IT Glossary". www.gartner.com.

- ^ Arbib, p.666

- ^ a b c d Bain (1873). Mind and Body: The Theories of Their Relation. New York: D. Appleton and Company.

- ^ a b James (1890). The Principles of Psychology. New York: H. Holt and Company.

- ^ Cuntz, Hermann (2010). "PLoS Computational Biology Issue Image | Vol. 6(8) August 2010". PLOS Computational Biology. 6 (8): ev06.i08. doi:10.1371/image.pcbi.v06.i08.

- ^ Sherrington, C.S. (1898). "Experiments in Examination of the Peripheral Distribution of the Fibers of the Posterior Roots of Some Spinal Nerves". Proceedings of the Royal Society of London. 190: 45–186. doi:10.1098/rstb.1898.0002.

- ^ McCulloch, Warren; Walter Pitts (1943). "A Logical Calculus of Ideas Immanent in Nervous Activity". Bulletin of Mathematical Biophysics. 5 (4): 115–133. doi:10.1007/BF02478259.

- ^ Hebb, Donald (1949). The Organization of Behavior. New York: Wiley.

- ^ Farley, B.; W.A. Clark (1954). "Simulation of Self-Organizing Systems by Digital Computer". IRE Transactions on Information Theory. 4 (4): 76–84. doi:10.1109/TIT.1954.1057468.

- ^ Rochester, N.; J.H. Holland, L.H. Haibt and W.L. Duda (1956). "Tests on a cell assembly theory of the action of the brain, using a large digital computer". IRE Transactions on Information Theory. 2 (3): 80–93. doi:10.1109/TIT.1956.1056810.

- ^ Rosenblatt, F. (1958). "The Perceptron: A Probabilistic Model For Information Storage And Organization In The Brain". Psychological Review. 65 (6): 386–408. CiteSeerX 10.1.1.588.3775. doi:10.1037/h0042519. PMID 13602029.

- ^ a b Werbos, P.J. (1975). Beyond Regression: New Tools for Prediction and Analysis in the Behavioral Sciences.

- ^ Minsky, M.; S. Papert (1969). An Introduction to Computational Geometry. MIT Press. ISBN 978-0-262-63022-1.

- ^ Barth, Peter F. (1981). "Cooperativity and the Transition Behavior of Large Neural Nets". Master of Science Thesis. Burlington: University of Vermont: 1–118.

- ^ Krizan, J.E.; Barth, P.F.; Glasser, M.L. (1983). "Exact Phase Transitions for the Ising Model on the Closed Cayley Tree". Physica. North-Holland Publishing Co. 119A: 230–242.

- ^ Glasser, M.L.; Goldberg, M. (1983), "The Ising model on a closed Cayley tree", Physica, 117A: 670–672

- ^ Rumelhart, D.E.; James McClelland (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Cambridge: MIT Press.

- ^ Russell, Ingrid. "Neural Networks Module". Archived from the original on May 29, 2014.

- ^ McCulloch, Warren; Pitts, Walter (1943). "A Logical Calculus of Ideas Immanent in Nervous Activity". Bulletin of Mathematical Biophysics. 5 (4): 115–133. doi:10.1007/BF02478259.

- ^ Copeland, B. Jack, ed. (2004). The Essential Turing. Oxford University Press. p. 403. ISBN 978-0-19-825080-7.

- ^ a b Billings, S. A. (2013). Nonlinear System Identification: NARMAX Methods in the Time, Frequency, and Spatio-Temporal Domains. Wiley. ISBN 978-1-119-94359-4.

- ^ Wieczorek, Szymon; Filipiak, Dominik; Filipowska, Agata (2018). "Semantic Image-Based Profiling of Users' Interests with Neural Networks". Studies on the Semantic Web. 36 (Emerging Topics in Semantic Technologies). doi:10.3233/978-1-61499-894-5-179.

- ^ "Neuroscientists demonstrate how to improve communication between different regions of the brain". medicalxpress.com. Retrieved September 6, 2020.

- ^ Rezaei, Hedyeh; Aertsen, Ad; Kumar, Arvind; Valizadeh, Alireza (August 10, 2020). "Facilitating the propagation of spiking activity in feedforward networks by including feedback". PLOS Computational Biology. 16 (8): e1008033. Bibcode:2020PLSCB..16E8033R. doi:10.1371/journal.pcbi.1008033. ISSN 1553-7358. PMC 7444537. PMID 32776924. S2CID 221100528.

Text and images are available under a Creative Commons Attribution 4.0 International License.

- ^ Administrator, NASA (June 5, 2013). "Dryden Flight Research Center - News Room: News Releases: NASA NEURAL NETWORK PROJECT PASSES MILESTONE". NASA.

- ^ "Roger Bridgman's defense of neural networks". Archived from the original on March 19, 2012. Retrieved August 1, 2006.

- ^ "Scaling Learning Algorithms towards {AI} - LISA - Publications - Aigaion 2.0". www.iro.umontreal.ca.

- ^ Yang, J. J.; et al. (2008). "Memristive switching mechanism for metal/oxide/metal nanodevices". Nat. Nanotechnol. 3 (7): 429–433. doi:10.1038/nnano.2008.160. PMID 18654568.

- ^ Strukov, D. B.; et al. (2008). "The missing memristor found". Nature. 453 (7191): 80–83. Bibcode:2008Natur.453...80S. doi:10.1038/nature06932. PMID 18451858. S2CID 4367148.

- ^ "2012 Kurzweil AI Interview with Jürgen Schmidhuber on the eight competitions won by his Deep Learning team 2009–2012". Archived from the original on August 31, 2018. Retrieved December 10, 2012.

- ^ Graves, Alex; Schmidhuber, Jürgen (2008). "Offline Handwriting Recognition with Multidimensional Recurrent Neural Networks". In Bengio, Yoshua; Schuurmans, Dale; Lafferty, John; Williams, Chris K. I.; Culotta, Aron (eds.). Advances in Neural Information Processing Systems 21 (NIPS'21). Vol. 21. Neural Information Processing Systems (NIPS) Foundation. pp. 545–552.

- ^ Graves, A.; Liwicki, M.; Fernandez, S.; Bertolami, R.; Bunke, H.; Schmidhuber, J. (2009). "A Novel Connectionist System for Improved Unconstrained Handwriting Recognition". IEEE Transactions on Pattern Analysis and Machine Intelligence. 31 (5): 855–868. CiteSeerX 10.1.1.139.4502. doi:10.1109/TPAMI.2008.137. PMID 19299860. S2CID 14635907.

- ^ Hinton, G. E.; Osindero, S.; Teh, Y. (2006). "A fast learning algorithm for deep belief nets" (PDF). Neural Computation. 18 (7): 1527–1554. CiteSeerX 10.1.1.76.1541. doi:10.1162/neco.2006.18.7.1527. PMID 16764513. S2CID 2309950.

- ^ Fukushima, K. (1980). "Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position". Biological Cybernetics. 36 (4): 193–202. doi:10.1007/BF00344251. PMID 7370364. S2CID 206775608.

- ^ Riesenhuber, M.; Poggio, T. (1999). "Hierarchical models of object recognition in cortex". Nature Neuroscience. 2 (11): 1019–1025. doi:10.1038/14819. PMID 10526343. S2CID 8920227.

- ^ D. C. Ciresan, U. Meier, J. Masci, J. Schmidhuber. Multi-Column Deep Neural Network for Traffic Sign Classification. Neural Networks, 2012.

- ^ D. Ciresan, A. Giusti, L. Gambardella, J. Schmidhuber. Deep Neural Networks Segment Neuronal Membranes in Electron Microscopy Images. In Advances in Neural Information Processing Systems (NIPS 2012), Lake Tahoe, 2012.

- ^ D. C. Ciresan, U. Meier, J. Schmidhuber. Multi-column Deep Neural Networks for Image Classification. IEEE Conf. on Computer Vision and Pattern Recognition CVPR 2012.

External links

- A Brief Introduction to Neural Networks (D. Kriesel) - Illustrated, bilingual manuscript about artificial neural networks; Topics so far: Perceptrons, Backpropagation, Radial Basis Functions, Recurrent Neural Networks, Self Organizing Maps, Hopfield Networks.

- Review of Neural Networks in Materials Science

- Artificial Neural Networks Tutorial in 3 languages (Univ. Politécnica de Madrid)

- Another introduction to ANN

- Next Generation of Neural Networks - Google Tech Talks

- Performance of Neural Networks

- Neural Networks and Information

- Sanderson, Grant (October 5, 2017). "But what is a Neural Network?". 3Blue1Brown. Archived from the original on November 7, 2021 – via YouTube.


Source: https://en.wikipedia.org/wiki/Neural_network

Posted by: bakerboser1959.blogspot.com
